Scraping Web Data into TigerGraph with Tor


by Linxiu Jiang, originally posted on Medium

1. Introduction

This blog post introduces a scraping demo I recently built and drills into some interesting ideas about encryption and decryption.

For the scraping demo, I use the Requests library and a Tor proxy to scrape user subscription information from the video platform www.bilibili.com, and then feed the data into a TigerGraph database to analyze the relationships between users. For encryption and decryption, I will introduce the fundamental mechanism of the Tor Network, which is a very intuitive way to understand these concepts.

If you are interested in these contents, keep reading!

2. Scraping Demo

2.1. Anti-anti-spider

When you scrape data from web pages, many anti-spider mechanisms can block your access to the information. Before we deal with these bans, we should be aware of the most common anti-spider mechanisms, listed below.

· Async IO

· User-Agent

· Animating Authentication

· Access per unit time and access volume

Async IO: the page loads new content asynchronously, so a user has to do something like scrolling to trigger a new response on the same web page.

User-Agent: the server reads the User-Agent field in the client's request header and can reject clients that do not look like a normal browser.

Animating Authentication: some servers check whether a client is a headless robot by having it play a simple game (a CAPTCHA-style challenge).

Access per unit time and access volume can be easily controlled, so I won't explain them further.
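Still, for reference, controlling access per unit time and access volume can be as simple as spacing out requests. The sketch below is a hypothetical helper, not code from the original project: it enforces a minimum interval plus optional random jitter between requests and caps the total number of requests. The interval, jitter and cap values are assumptions to tune for the target site.

```python
import random
import time


class Throttle:
    """Hypothetical helper: limits request rate and total request volume."""

    def __init__(self, min_interval, jitter=0.0, max_requests=None):
        self.min_interval = min_interval  # minimum seconds between two requests
        self.jitter = jitter              # extra random delay, up to this many seconds
        self.max_requests = max_requests  # cap on total requests (None = unlimited)
        self.count = 0
        self._last = None

    def wait(self):
        """Block until the next request is allowed; raise once the cap is reached."""
        if self.max_requests is not None and self.count >= self.max_requests:
            raise RuntimeError("request volume limit reached")
        if self._last is not None:
            target = self._last + self.min_interval + random.uniform(0, self.jitter)
            delay = target - time.monotonic()
            if delay > 0:
                time.sleep(delay)
        self._last = time.monotonic()
        self.count += 1
```

Usage: create one `Throttle(min_interval=3, jitter=2, max_requests=100)` and call its `wait()` right before each `requests.get(...)`.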

2.2. What factors should be considered when selecting a crawler?

How do we stay anonymous, and what factors should be considered when selecting a crawler? The key is simple: choose the one that matches your requirements. For my requirements, I compared two common scrapers:

· Selenium scraper

· Requests library + Tor

Selenium scraper: Selenium is an automated testing tool that drives a real browser. It executes JavaScript, which means it can scrape data by simulating browser actions.

Requests library + Tor: Requests is a more traditional crawler approach. It scrapes data by sending HTTP requests directly. Tor is an anonymity network: it relays traffic through a chain of volunteer-run relays (proxies), so the server sees the IP address of the last relay instead of yours, which helps avoid bans from the server.

This scraping demo targets www.bilibili.com, whose data is easy to access (the follower lists come from a JSON API), so I chose Requests + Tor as my scraper.

2.3. What configurations does Tor need? How do we scrape a web page with Tor?

2.3.1. Configurations:

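To scrape data from a website using Tor, we need to configure two important things:

· AJAX Request

· User-Agent

AJAX Request: the data is loaded by AJAX requests, so instead of scrolling the page we request the API URL directly and page through the results with the "pn" (page number) parameter in the URL.

User-Agent: Tor changes our IP address, but the request header should change too, so we send a different User-Agent with each request.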
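Because the follower list is served as JSON from an AJAX endpoint, paging comes down to changing `pn` in the query string. A minimal sketch, reusing the followers API URL that appears later in this post; the `page_urls` helper and its default of 5 pages (matching the page limit described in section 2.4) are assumptions for illustration.

```python
from urllib.parse import urlencode

FOLLOWERS_API = "https://api.bilibili.com/x/relation/followers"


def page_urls(channel_id, pages=5, page_size=500):
    """Hypothetical helper: build one followers-API URL per page number ("pn")."""
    urls = []
    for pn in range(1, pages + 1):
        query = urlencode({"vmid": channel_id, "pn": pn, "ps": page_size, "order": "desc"})
        urls.append(FOLLOWERS_API + "?" + query)
    return urls
```

Each URL can then be fetched through the Tor proxy with a fresh random User-Agent header.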

2.3.2. Tor methods:

Use the requests library to request a page:

```python
import requests

html = requests.get(url).text  # url: the page to fetch
```

Use a proxy to request a page:

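```python
# Tor listens for SOCKS connections on port 9050 by default.
# SOCKS support in requests needs the extra: pip install "requests[socks]".
# "socks5h" (rather than "socks5") also resolves DNS through Tor.
proxies = {
    'http': 'socks5h://127.0.0.1:9050',
    'https': 'socks5h://127.0.0.1:9050',
}

html = requests.get(url, proxies=proxies).text
```

Use stem to renew the IP address: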

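```python
from stem import Signal
from stem.control import Controller

def renew_ip():
    # Tor's control port (9051) must be enabled in torrc ("ControlPort 9051")
    with Controller.from_port(port=9051) as controller:
        controller.authenticate()  # pass password=... if a control password is set
        print("Success!")
        # NEWNYM makes Tor use new circuits for new connections,
        # which usually gives a different exit IP address
        controller.signal(Signal.NEWNYM)
        print("New Tor connection processed")
```

Use fake_useragent to change the user agent: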

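```python
from fake_useragent import UserAgent

# pick a random real-browser User-Agent string for this request
headers = {'User-Agent': UserAgent().random}
html = requests.get(url, proxies=proxies, headers=headers).text
```

Use cron for automation: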

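```python
import random
import time

# wait a random time (up to 2 hours) at the start of each scheduled run,
# so the requests do not hit the server at the same moment on every run
wait = random.uniform(0, 2 * 60 * 60)
time.sleep(wait)
```

2.4. Implementation with Python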

First step: send one request to get the total number of followers. The API has several limitations. One is a volume limit: we can only access the first 5 pages. The other is asynchronous IO.

We can check the access volume limit like this:

```python
if req.json()["code"] == 22007:  # limitation error for page access
    ...  # stop requesting further pages
```

The asynchronous IO can be solved like this:

tbc…

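```python
import requests

# Request to get the total number of followers
# channel_id: the Bilibili user id whose followers we scrape
url = 'https://api.bilibili.com/x/relation/followers?vmid={}&pn=1&ps=500&order=desc'.format(channel_id)
req = requests.get(url, headers=headers)
total = req.json()["data"]["total"]
print(len(req.json()["data"]["list"]))  # followers returned on this page
```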
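The `total` value tells us how many pages exist, but only the first 5 are accessible. A small worked sketch of that arithmetic, assuming `ps=500` records per page as in the URL above; `pages_to_fetch` is a hypothetical helper name.

```python
import math


def pages_to_fetch(total, page_size=500, max_pages=5):
    """Pages needed to cover `total` followers, capped by the page-access limit."""
    return min(math.ceil(total / page_size), max_pages)
```

For example, a channel with 1,200 followers needs ceil(1200 / 500) = 3 pages, while one with 10,000 followers would need 20 pages and is capped at 5.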

There is one more limitation: the requests must come from a logged-in user. To handle this, we check status_code before asking for the JSON object from the response. If status_code is 200, we take that as a sign that we have successfully logged in.

```python
if req.status_code == 200:  # user has successfully logged in
    ...
```

Second step: access the first 5 pages and extract the information we want. The API returns JSON rather than HTML, so instead of parsing a DOM tree we read the specific fields (user id, name, avatar, follow time) from the JSON object:

```python
fans = req.json()["data"]["list"]  # list of follower records from the response
for fan in fans:
    ...  # do something: extract, output and so on
```
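The extraction inside the loop can be kept in one small function. A minimal sketch, assuming each follower record carries the `mid`, `uname`, `face` and `mtime` fields used in the CSV code below; `parse_fan` is a hypothetical name.

```python
from datetime import datetime


def parse_fan(fan):
    """Pick user id, name, avatar URL and follow time out of one follower record."""
    return {
        "id": fan["mid"],
        "name": fan["uname"],
        "avatar": fan["face"],
        # mtime is a Unix timestamp; convert it to a local datetime
        "follow_time": datetime.fromtimestamp(fan["mtime"]),
    }
```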

Final step: store the data into files. TigerGraph supports both .csv and .json files. In this case, we use .csv to store the data.

```python
from datetime import datetime

# header rows (written once)
fansfile.write('"id","name","avatar"\n')       # vertex csv header
followsfile.write('"from","to","sub_date"\n')  # edge csv header

for fan in fans:
    # vertex csv file: one row per follower
    fansfile.write('"{}","{}","{}"\n'.format(fan["mid"], fan["uname"], fan["face"]))
    # edge csv file: follower -> channel, with the follow time
    followsfile.write('"{}","{}","{}"\n'.format(fan["mid"], channel_id, datetime.fromtimestamp(fan["mtime"])))
```
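Formatting rows by hand breaks as soon as a user name contains a double quote or a comma. An alternative sketch (a swap-in, not the original code) uses Python's built-in `csv` module with `QUOTE_ALL`, which quotes every field and escapes embedded quotes by doubling them. The `write_csv` function and its file-object arguments are assumptions.

```python
import csv
from datetime import datetime


def write_csv(fans, channel_id, fansfile, followsfile):
    """Write vertex rows (fans) and edge rows (follows) with proper CSV quoting."""
    fan_writer = csv.writer(fansfile, quoting=csv.QUOTE_ALL, lineterminator="\n")
    follow_writer = csv.writer(followsfile, quoting=csv.QUOTE_ALL, lineterminator="\n")
    fan_writer.writerow(["id", "name", "avatar"])       # vertex header row
    follow_writer.writerow(["from", "to", "sub_date"])  # edge header row
    for fan in fans:
        fan_writer.writerow([fan["mid"], fan["uname"], fan["face"]])
        follow_writer.writerow([fan["mid"], channel_id, datetime.fromtimestamp(fan["mtime"])])
```

Open both files with `open(path, "w", newline="", encoding="utf-8")`, as the `csv` module documentation recommends.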

Here are the output files:

2.5. Feed data into TigerGraph

If you are not familiar with TigerGraph, check out Akash's blog post https://www.tigergraph.com/blog/getting-started-with-tigergraph-3-0/ to get started.

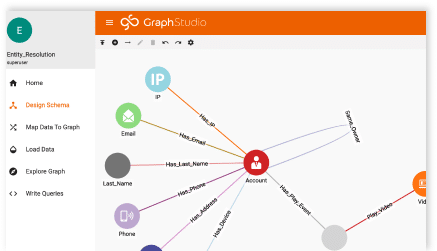

2.5.1. Build schema and create the graph

Schema: person -(subscribe_to)-> person

· Person vertex holds attributes: id, name, avatar

· Subscribe_to edge holds attribute: sub_date
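The schema above could be written in GSQL roughly as follows. This is a sketch, not the author's actual script: the graph name `Bilibili` is hypothetical, and `STRING` for `id` and `DATETIME` for `sub_date` are assumed types. The statements are kept in a Python string so they can be pasted into the GSQL shell or sent through a client such as pyTigerGraph's `gsql()` method.

```python
# Hypothetical GSQL DDL for the person -(subscribe_to)-> person schema.
# The graph name and attribute types are assumptions, not from the original project.
SCHEMA_GSQL = """
CREATE VERTEX person (PRIMARY_ID id STRING, name STRING, avatar STRING) WITH primary_id_as_attribute="true"
CREATE DIRECTED EDGE subscribe_to (FROM person, TO person, sub_date DATETIME)
CREATE GRAPH Bilibili (person, subscribe_to)
"""
```

The edge is directed because a subscription goes one way: from the follower to the channel it follows.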

2.5.2. Load data from CSV files
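A loading job for the two CSV files might look like the following. Again a hedged sketch: it assumes a graph named `Bilibili` (hypothetical) containing the `person` vertex and `subscribe_to` edge described above, the job name `load_bilibili` and file tags `fans_file` and `follows_file` are made up, and it relies on the header rows and double-quoted fields written in section 2.4 (hence `HEADER="true"` and `QUOTE="double"`).

```python
# Hypothetical GSQL loading job for the vertex and edge CSV files.
# Column names come from the CSV header rows; job and file-tag names are made up.
LOADING_JOB_GSQL = """
USE GRAPH Bilibili
CREATE LOADING JOB load_bilibili FOR GRAPH Bilibili {
  DEFINE FILENAME fans_file;
  DEFINE FILENAME follows_file;
  LOAD fans_file TO VERTEX person VALUES ($"id", $"name", $"avatar")
    USING SEPARATOR=",", HEADER="true", EOL="\\n", QUOTE="double";
  LOAD follows_file TO EDGE subscribe_to VALUES ($"from", $"to", $"sub_date")
    USING SEPARATOR=",", HEADER="true", EOL="\\n", QUOTE="double";
}
"""
```

With pyTigerGraph, `conn.gsql(LOADING_JOB_GSQL)` would create the job, and a call such as `runLoadingJobWithFile` would feed each file to its tag; check the client's documentation for the exact signature.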

2.5.3. Explore the Graph

Now you can explore the Graph using queries or Graph Studio!
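Before writing graph queries, a quick sanity check of the relationships can also be done in plain Python on the edge CSV. This is a hypothetical stand-in for a graph query, not TigerGraph code: it finds users who subscribe to more than one of the scraped channels, assuming the `"from","to","sub_date"` header row written in section 2.4.

```python
import csv
from collections import defaultdict


def multi_channel_fans(follows_rows):
    """Map each user id to the set of channels it subscribes to,
    keeping only users who follow two or more channels."""
    channels_by_fan = defaultdict(set)
    for row in follows_rows:
        channels_by_fan[row["from"]].add(row["to"])
    return {fan: chans for fan, chans in channels_by_fan.items() if len(chans) > 1}


def load_follows(path):
    """Read the edge CSV as a list of dicts (tolerating a space after each comma)."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f, skipinitialspace=True))
```

In TigerGraph the same question becomes a traversal over `subscribe_to` edges, which scales better once several channels have been loaded.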

3. Encryption Exploration

Let us drill into my favorite part of this blog — encryption and decryption.

In the first part, we mentioned the Tor Network. Tor provides a smart way to protect users' activity from spying through encryption. However, the process still has vulnerable components, which means that even if you diligently build the onion network, your activity can still be spied on somewhere along the way. Looking into the Tor mechanism helps us find the reason.

Normally, when you connect to the internet, the server (or a spy) can tell who you are and what you are doing from the IP address attached to your requests.

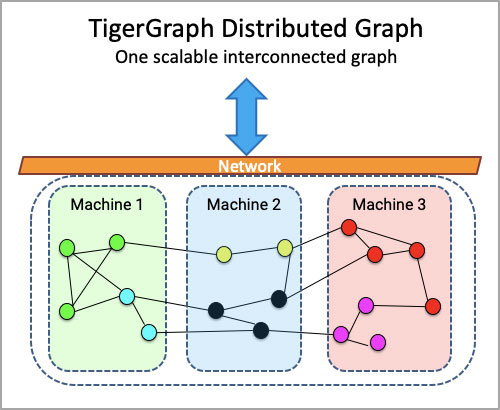

In the Tor Network, the client's data is encrypted and travels through multiple relay points (proxies), which hides the client's IP address from the server. The client shares a separate key with each relay, so only the client holds all the keys. When the client sends a request to the server, the layers of encryption are peeled off one by one, one per relay, until the request arrives at the server. When the server responds, each relay adds its layer of encryption onto the response data until it arrives at the client, which then decrypts all the layers with its keys.
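The layering idea can be shown with a toy model. This sketch is illustrative only: it uses a SHA-256-based XOR keystream from the standard library instead of real cryptography (real Tor negotiates a separate key with each relay and encrypts relay cells with AES in counter mode), and the relay keys in the usage are made up. The client wraps the request in one layer per relay, and each relay peels exactly one layer with its own key.

```python
import hashlib


def keystream(key, length):
    """Toy keystream: SHA-256(key || counter) blocks. NOT secure, illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor_layer(key, data):
    """Add or remove one layer: XOR with the same keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def client_wrap(message, relay_keys):
    """Client side: add the exit relay's layer first, so the entry relay's layer is outermost."""
    for key in reversed(relay_keys):
        message = xor_layer(key, message)
    return message


def relay_path(onion, relay_keys):
    """Each relay in order (entry, middle, exit) peels off its own layer."""
    for key in relay_keys:
        onion = xor_layer(key, onion)
    return onion
```

With keys such as `[b"entry-key", b"middle-key", b"exit-key"]`, `relay_path(client_wrap(msg, keys), keys)` returns the original `msg`, while stopping after the first two relays still leaves the exit layer in place. The response direction is the mirror image: each relay adds its layer with `xor_layer`, and the client removes all of them. Because XOR layers commute, this toy does not enforce the peeling order the way real nested encryption does.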

According to this picture, data is protected from spies while it travels through the network. The reason is that each relay point is isolated: it only knows the hop immediately before it and the hop immediately after it, never the whole path, and it only sees the encrypted data passing through. However, components "1" and "2" are vulnerable. If a hacker puts spies at these two positions, the client's data can be accessed easily. The hacker can also record and compare the timing of the traffic at these two positions (a traffic correlation attack, which is another story).

If you have any questions or suggestions, please check out the whole project on my GitHub or contact me on LinkedIn: https://www.linkedin.com/in/linxiu-frances-jiang-961986117?lipi=urn%3Ali%3Apage%3Ad_flagship3_profile_view_base_contact_details%3BuFR2ggkDTOKCiS6focX2PQ%3D%3D