Graph Neural Network-based Graph Outlier Detection: A Brief Introduction

- Blog >

- Graph Neural Network-based Graph Outlier Detection: A Brief Introduction

This blog is written by Yingtong Dou, a Ph.D. candidate at the University of Illinois Chicago, working on graph mining, fraud detection, and secure machine learning. The content of this blog is based on his recent paper and a tutorial at Machine Learning in Finance workshop at KDD 2022.

This blog will introduce the basic mechanism of graph neural networks and the concepts and methods in unsupervised node outlier detection on graphs. Some findings and thoughts in this direction are also shared. Last, I will introduce a GNN-based graph outlier detection library (PyGOD) and its integration with TigerGraph ML Workbench.

Graph Neural Networks

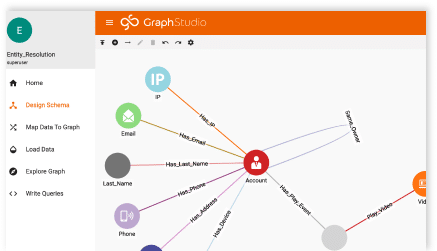

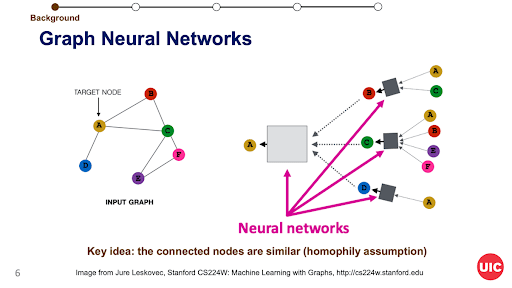

The graph neural network (GNN) has recently become a dominant and powerful tool in mining graph data. Like the CNN for image data, the GNN is a neural network designed to encode the graph structure and learn a node’s embedding via iteratively aggregating its neighbors’ embedding (see Figure 1). Most GNNs hold the homophily assumption that the connected nodes are similar; therefore, aggregating the neighbors’ information would help learn a more informative center node representation. The center node representation can be used for downstream tasks like node classification, link prediction, and outlier detection (OD).

Outliers on Graph

The outlier is a sample that is significantly different from the remaining data. As a mainstream research direction in data mining research, outlier detection is also crucial in the industry. An outlier in real-world data usually indicates fraudulent behavior, system error, network intrusion, or network failure. Those outliers may result in significant financial loss and security issues.

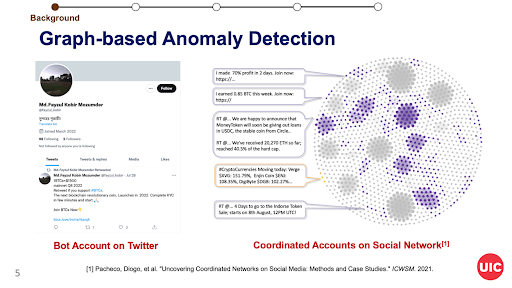

Besides the outliers in traditional tabular data, the graph model could elevate the outlier detection performance, especially when data instances share common properties and have proximities. As Figure 2 shows, the bot account is less suspicious individually, but its co-retweet is densely connected, which can be easily spotted from the graph perspective.

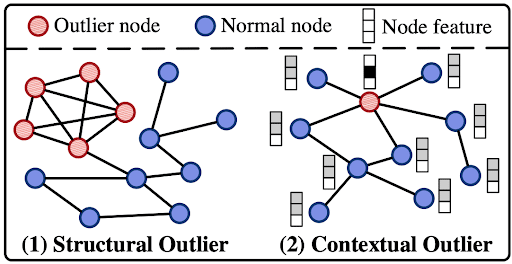

In graph outlier detection, two typical types of outliers are defined and studied by previous literature. According to Figure 3, (1) structural outliers are densely connected nodes in contrast to sparsely connected regular nodes, and (2) contextual outliers are nodes whose attributes differ significantly from their neighboring nodes. The structural outliers are illustrated above, in Figure 2. The contextual outlier depicts nodes dissimilar to their neighbors in the graph, e.g., compromised devices in the computer network. Its definition is like the assumption of the outlier in the classic proximity-based OD methods.

GNN-based Node Outlier Detection

Before the advance of the GNNs, matrix factorization, density-based clustering, and relational learning methods are leveraged to encode the graph information and identify outliers. More non-GNN graph OD methods can be found in this comprehensive survey.

Let us come back to GNNs. After obtaining the node representations, the GNN is optimized using different loss functions (objective functions) for different tasks. For instance, a cross-entropy loss is used to optimize GNN for the node classification task.

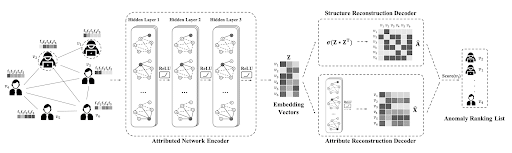

For node outlier detection, the common practice is to integrate GNNs into the auto-encoder, where the GNN is used as the encoder and decoder. This neural architecture is called graph auto-encoder (GAE). Like vanilla autoencoders, the GAE encodes the graph information by reconstructing the graph data, i.e., reconstructing node features and edges. In terms of outlier detection, GAE can be used to encode the normal graph information, and the node with a high reconstruction error will indicate its outlier degree. Figure 4 shows the first model using GAE for node outlier detection.

Note that using GAE for outlier detection has two implicit assumptions for the graph data: (1) the outliers only take a small amount of data, and the majority of the data is normal; (2) the normal data share common attribute and structure properties. With this assumption, the GAE can be leveraged to detect structural and contextual outliers, and there have been many variants of GAE in the recent two years.

Findings from Benchmark

Next, I will share some valuable findings from our recent benchmark for GNN-based node outlier detection methods:

- Many existing GNN-based OD methods are developed based on synthetic outliers with the relatively naive assumption for the outliers; thus, many do not have an ideal performance in detecting organic outliers in the wild. The organic outliers are usually complicated, and their distribution may be diverse. Nevertheless, our benchmark shows that the GNN-based OD methods will be effective if the organic outliers follow the predefined outlier types.

- Like most deep learning methods, the GNN-based OD methods will be sub-optimal on small graphs. At the same time, most GNN-based OD methods are not scalable on large graphs with tens of millions of nodes.

- The performance of unsupervised GNN-based OD methods relies heavily on hyperparameters, and the hyperparameter tuning under unsupervised learning remains a challenge in machine learning research and practice.

- Most GNN-based OD methods prefer a specific type of outliers. It is not easy to balance and optimize the detection performance for each outlier type. Meanwhile, no method has consistent performance or outperforms other methods across different datasets in expectation.

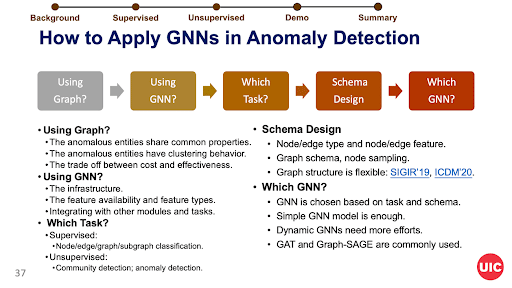

A Guideline for Graph-based OD

Based on the above findings, we argue that there is still a gap between the GNN-based OD and industry application due to its scalability constraints. Developing automatic, scalable, and task-oriented GNN-based OD methods would be a promising direction. As for applying GNN-based OD or graph-based OD, I give a guideline in the following figure to facilitate practitioners.

From the above guideline, I want to highlight that the exploratory analysis of the data and a precise problem definition are crucial for applying graph-based OD.

PyGOD and TigerGraph ML Workbench

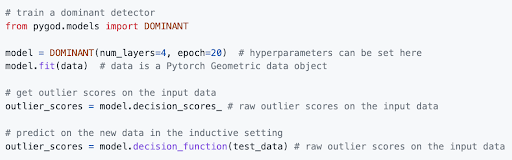

Last, I would like to introduce, PyGOD, a Python library developed along with our graph OD benchmark. The library is developed based on PyTorch and PyTorch Geometric (PyG) and the API style follows the popular ML library scikit-learn, where we can easily detect outliers on graphs with five lines of code:

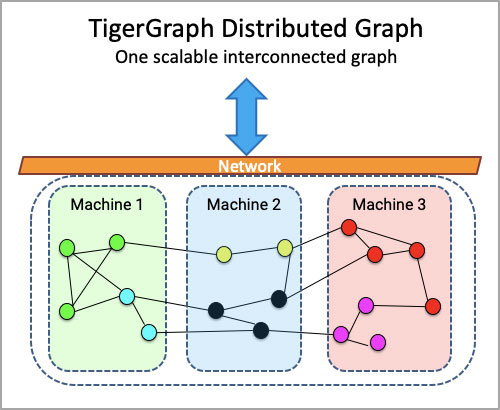

The PyGOD is continually developed toward covering more detection capabilities and higher scalabilities. Thanks to the TigerGraph ML Workbench, which enables the graph data transformation from TigerGraph DB to PyG data object, the PyGOD can be easily installed and tested on TigerGraph.

Please follow the tutorial and this jupyter notebook for instructions on running PyGOD under the TigerGraph environment.